On this page:

The Existing System

Once I had a DOS system set up, I started poking around the files I was given. I wasn’t given much instruction on the system; it was a lot of time to ask from volunteers, and largely, I wasn’t interested in running it – my goal was the data files.

The USB drive I was handed had several files,

two of which had .EXE suffixes: RODEO.EXE and RODEO2.EXE.

I started up the first RODEO.EXE and was met with a password prompt.

I had been given the password, but it didn’t work.

At this point, I wasn’t even certain my VM setup would work,

so I wasn’t sure if the problem was me or the password.

But I did have a guess about what 1986’s security posture likely entailed, and so I took a stab at it this way (on my host machine, to be clear):

|

After a few tries, I was in.

The application presents a series of prompts prefixed by numbers, and it allows navigation between screen by entering the corresponding number. As you might imagine, it includes options to add new members, view existing members (by last name or membership number), enter rodeo registration details, record event results, and print standings reports.

Looking at the other files it uses gives a hint to how it works:

PERSONEL.DBFNAMENDX.NTXIGRANDX.NTXEVENTS.DBFEVENTZ.DBFAZEVENTS.DBFAZINDEX1.NTXAZINDEX2.NTXTITLES.DBFSTATES.DBFRIBBONS.DBFRIBBONS2.DBF

What is a DBF File?

In the 1980s, dBase was a popular database management system

that included programming language to build applications with terminal UIs.

It was so popular, in fact, that it lead to a large 3rd-party community,

which looked to standardize the basics of the language and storage format.

In an effort to avoid trademark violations,

these collectively came to be known as xBase applications.

An xBase program works by manipulating DBF files,

which is essentially a single database table.

A complex application can consists of many such files,

in addition to index files and “memo” extensions (for larger blobs).

Overall, the application experience is like

working with an interactive script

that reads and writes special binary files

expected to live in the same directory.

That’s not completely crazy – after all, that’s how git works!

The presence of DBF files means we’re working with some xBase program,

and the presence of the NTX files

suggests this was made with Clipper,

an xBase compiler targeting MS-DOS.

There are many existing modern programs that can handle the DBF format: In fact, LibreOffice and Excel can read and write them just fine. But my major concern with this approach were the specifics: if I wanted to write files, particularly those proprietary indexes, would content generated by those applications be suitable? Using these would have to be part of some larger pipeline anyway, since the ultimate goal is to compare data with the staff’s existing files. How would I package this up and deliver it in a way they could easily run? Instead, I would need a standalone application that could interact with them.

Parsing DBF with Rust

After seeing the format details listed on Wikipedia,

I decided I wanted to write my own parser.

There already exist multiple Rust implementations of DBF parsers,

but this seemed like a good project to use to get some experience:

- The format is relatively simple.

- I don’t need to worry about my code’s general

xBasecompatibility. - I can work around it if the rodeo application’s underlying implementation does not fit the standard.

The Wikipedia entry was a good starting point to understand the DBF structure,

but I found this description from Erik Bachmann

to be far more useful.

At a high-level, the file starts with a header describing the overall contents, followed by a list of field descriptors for the individual columns, then a list of packed records, each beginning with a “deleted” marker. Each record contains a value for each field described in the header, all encoded as ASCII (in the especially old version used by this application).

I used the binary-layout crate to declare the structures. You can see them context on GitHub, but I’ve copied them below:

define_layout!;

define_layout!;

define_layout!;

A few notes:

- The

last_updatedwas originally relative 1900, but now that’s a Y2K bug; other dates use an 8-characterYYYYMMDDformat. - The

flagsstructure is made up of several bits indicating theDBFversion and whether there are certain supporting files, but I found all my files started with0x03, representing the most basic kind ofDBFfile. - The

n_record_bytesshould be the sum of lengths of each record field, plus and additional 1 byte for the record-deleted flag.

Index Issues

The code online additionally defines the layout for

the Clipper Index Files (the NTX files – a modified B+tree),

but the actual code to read and write indexes is not part of the release.

When I originally wrote the code to work with the indexes,

I tested the results against the application running in DOS.

Although the program would read and use my indexes without issue,

I found that if I used the rodeo application to delete a record,

it would corrupt the index.

I spent days debugging this issue.

Not to spoil it, but the ending is rather unsatisfying:

it is apparently just a known bug in the version of Clipper

used to compile the application.

In fact, Clipper and other xBase applications

were pretty notorious for index corruption,

so much so that they commonly needed manual re-indexing.

I later learned of a INDEX.EXE program used by the staff,

and they told me they actively avoid deleting records

to help prevent issues.

As an aside,

I based the original implementation on details from Erik Bachmann,

and after scratching my head long enough, tracked down the book

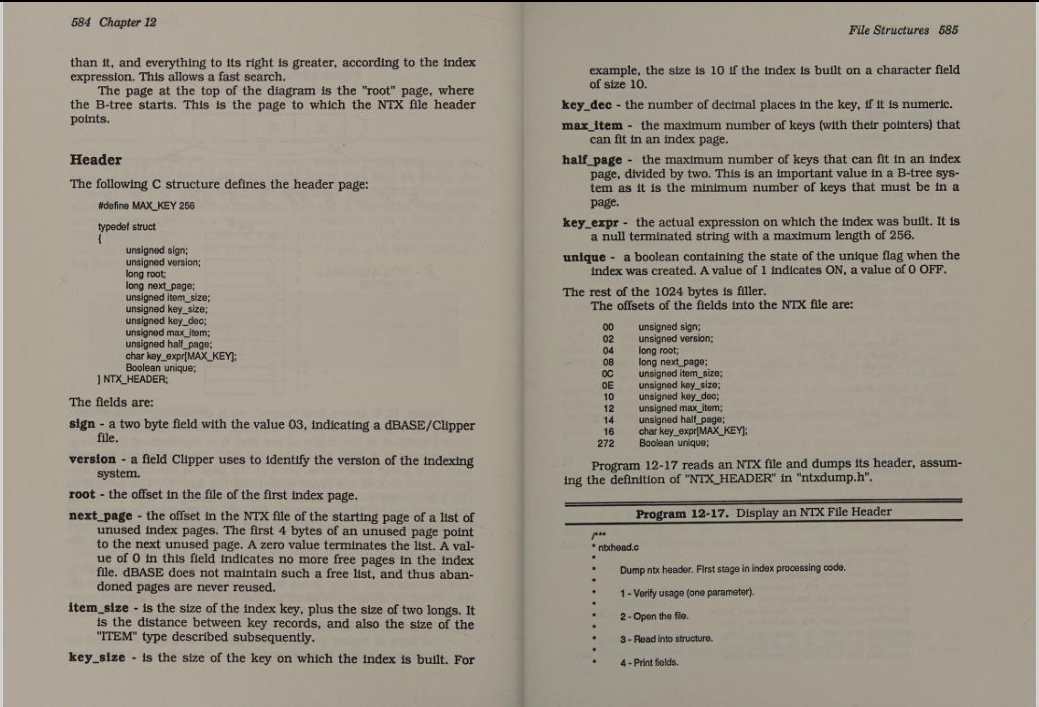

“Clipper Programming Guide” by Rick Spence, a Co-Developer of Clipper.

The book gives the NTX_HEADER as a typedef struct written in C

(Chapter 12, page 584),

and it closely matches Bachmann’s version;

however, Spence’s list of byte offsets for each field

are written in base-16,

except the offset for the unique field has been miscalculated:

the field should follow 256 bytes after the key_expr,

which starts at offset .

It should begin at

(as Bachmann correctly indicates),

but it appears as , likely from the mistaken

rather than

,

mixing up base-16 and base-10.

Moving On

My DBF implementation handles the primary datatypes,

though it only nominally handles Memo fields.

Those are used to store character strings longer than 256 bytes,

but they aren’t used in this application.

The DBF values are string-encoded;

though they all have a maximum size,

some are null-terminated,

while others are padded with spaces.

I’m not certain if that’s an implementation detail

or if it’s simply a consequence of how the rodeo application works

(navigating from one field to the next

appears to implicitly fill the remaining space).

In any case, it made sense to have a function

to find the value boundaries with these restrictions in mind.

With the struct definitions above, reading and writing a FieldDescriptor is simple:

Reading the actual field values is not bad for most of the types.

Characters don’t need conversion,

Booleans are easy to match,

Float and Memo (a uint64) are just delegated to Rust’s parsers,

and I make use of Chrono’s NaiveDate for dates.

The only somewhat complex one is Numeric, which can represent decimal types.

The code checks for a decimal point, and if present, and splits it apart,

and converts the integer and fractional components separately.

Internally, it holds the mantissa and exponent.

Writing field values is mostly a matter of picking the right format string.

The code includes a TableReader and TableWriter

written in a Typestate Pattern

since I need to ensure the headers are read/written in the right order.

The reader gives an iterator over the records,

which themselves are iterators over the fields of a record.

The current implementation is completely stream-based.

The current writer implementation relies too much on the caller though: to make it work with the code I had at the time, I expect the caller to just provide a list of records that can all be written using the same descriptors. It doesn’t actually validate that the fields are given consistently, though, and I’d rather it not be part of the runtime implementation. By the time I’d written the writer code, I was more focused on validating the incoming registration and presenting the results. Having the ability to write records may be useful for better integration, but remember that the existing system is supposed to go away, and soon; I added it so that I could generate tables filled with random records in order to verify and demonstrate the application’s use.